Assignment feedback generator

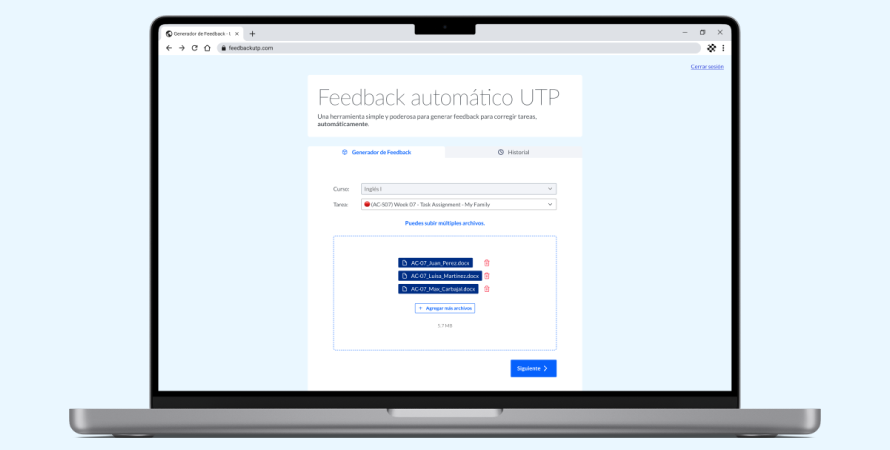

At UTP, I was tasked with creating the interfaces and workflow for a tool designed by our Technology team to help instructors of online courses review student assignments. Ideally, this would free instructors from tedious work (reviewing many short and mid-length papers and written assignments that often display similar error patterns) and let them spend that time on higher-value tasks, such as one-on-one sessions with students or dedicated sessions to reinforce topics the class might be struggling with.

Initially, the generator was created for ESL teachers to use, but we also wanted it to be scalable to 100+ courses.

Despite that pretty massive ask, the initial desired feature list for the generator was fairly simple:

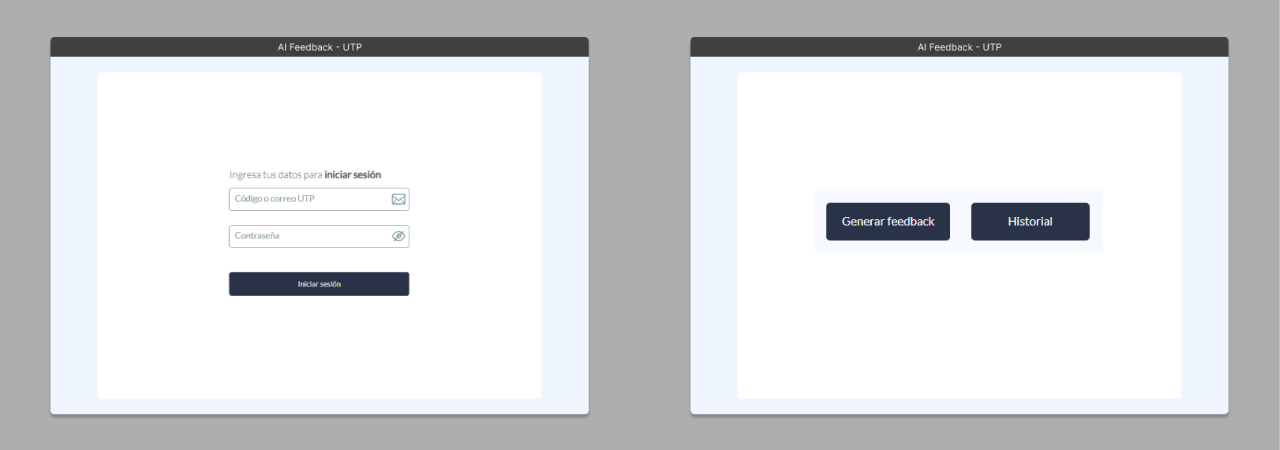

- It should be easy to use for instructors (mostly aged 40 and over, with little experience of web tools).

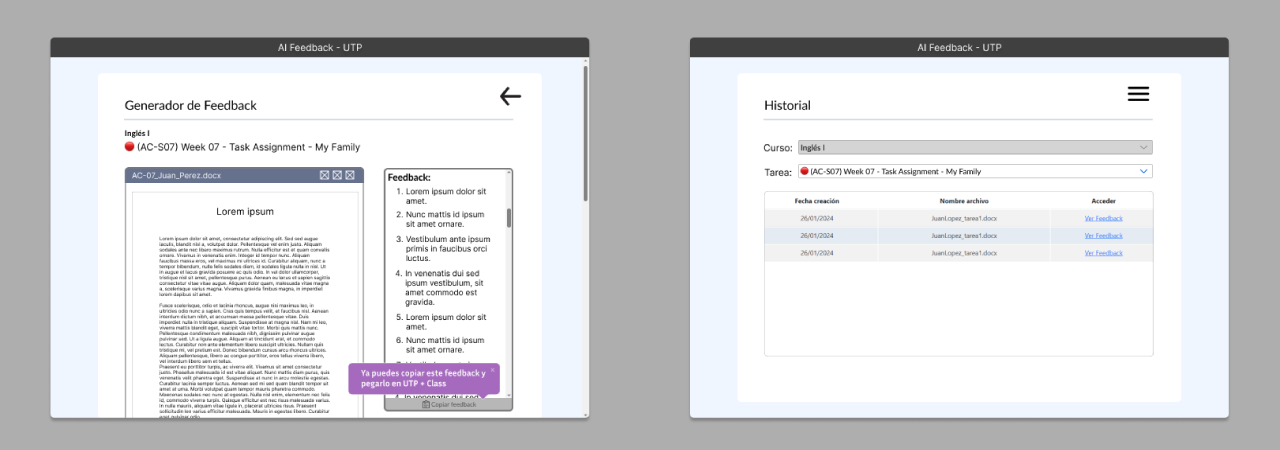

- It should generate feedback for multiple files/assignments at the same time.

- It should let users view, copy and download the feedback for each file as soon as it is generated.

- It should allow viewing, copying and downloading previously generated feedback - individually and in bulk.

- It should have a simple UI so that its core components can eventually be adapted and integrated into UTP+Class without significant redesign.

My team had already designed a product flowchart for the generator - my job was to turn it into clean interfaces and suggest extra improvements.